This article is Part I of a two-part series on how to address the algorithms that social media companies design to keep the younger generation addicted to phones and computer screens, generating significant profits for these corporations. Part I provides the background necessary for understanding Part II. It highlights the federal government’s inability or unwillingness to regulate the algorithms used by social media companies that profit at the expense of children’s safety.

Part II discusses how state Attorneys General and private lawyers can leverage common law to regulate these algorithms. It is important to note that Part I does not delve into medical discussions on the topic. Instead, it presents general conclusions drawn from information available on the Internet, along with responses from ChatGPT, to provide context for the legal concepts discussed later.

How this article got started.

Several television stations aired a video, posted on TikTok and Instagram, of a young teenager subway surfing on a New York City train. The news anchor explained that a parent of a child who was killed while subway surfing has filed a lawsuit against the two companies, seeking accountability for their role in promoting such dangerous activities. The parent claims that the algorithms used by these social media platforms encourage youth to engage in risky behaviors. In response, the companies deny any liability, stating that they promptly remove videos of this nature as soon as they are discovered. This situation highlights the potential harm that social media can inflict on our children, a concern that the government is seemingly overlooking. According to reports, New York City has experienced 18 deaths and 20 injuries related to subway surfing, with the average age of the affected children being just 14 years old.

This social media saga has historical roots in Congress. Any effort to hold Big Tech accountable for the harm caused to society’s youth runs into Section 230 of the Communications Act, which Congress enacted to grant the tech industry immunity from suit for the third-party content it publishes and for its content moderation practices. A significant portion of that third-party content consists of videos, conspiracy theories, and personal attacks or shaming. Section 230 grants immunity to Internet service providers when they publish this content, even when it teaches children how to subway surf, or even how to commit suicide.

Section 230 was enacted in 1996 to protect the fledgling tech industry. Now, nearly thirty years later, technology is the largest industry in the world, with a market cap of $26 trillion. By comparison, the next largest U.S. industry by market cap is financials at $16 trillion. The U.S. federal budget is $6 trillion. Notwithstanding its massive size and wealth, Big Tech still enjoys liability protection from the federal government, a fact that demonstrates its privileged position in society.

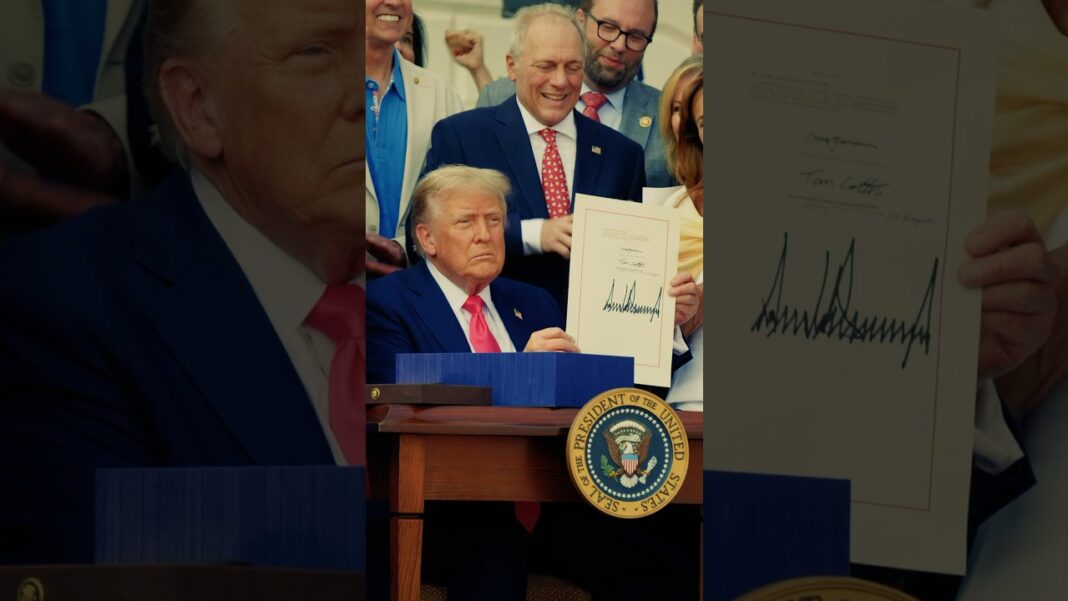

Another example of Big Tech’s power involves the April 2024 law banning TikTok in the U.S. Demonstrating its lobbying power, Big Tech persuaded President Trump, almost immediately upon being sworn in on January 20, 2025, to direct the Justice Department not to enforce the law. Not only did the President nullify a congressional statute, but he is also currently negotiating the sale of TikTok to three major corporations: Oracle, Dell, and Walmart, all of which are close to the President. In this closed system, where social media is not held responsible for the harm it causes, many large tech companies have contributed millions to Trump’s presidential library, likely as a “thank you” to the President for dispensing favors.

The problem is that our social media cancer has already spread to the brain, and the time to act is now.

According to ChatGPT, social media is not just a tool, but a global phenomenon. It is widely used and generates trillions of comments and posts per year. Twitter alone churns out 500 million to 600 million comments and posts per day worldwide, or about 180-220 billion per year. Facebook users post 250 million photos per day and generate approximately 5 billion reactions per day, which at that rate comes to about 1.8 trillion per year. TikTok users watch more than one billion videos daily. These numbers are not just statistics, but a reflection of the pervasive influence of social media in our lives.

The harm social media is causing.

The harm directly affects the mental health of our children and indirectly affects their social behavior. ChatGPT identifies the harm as anxiety and depression, especially over body image and lifestyles. Social media fosters addiction by triggering the release of dopamine, a chemical messenger that drives a constant need to seek rewards, and it amplifies cyberbullying. Driving these negative impacts are algorithms that encourage outrageous behavior to generate more clicks, comments, and emotional reactions, ultimately benefiting the industry’s advertisers.

Can the algorithms be changed?

The answer to whether the algorithms can be changed is straightforward: yes, they can. However, the reason they are not changed is equally clear: social media companies profit from every click, interaction, and market expansion. With the technology industry’s market capitalization at $26 trillion, these companies are raking in massive profits by targeting children. Social media companies make $2 billion marketing to children under twelve and $8.6 billion marketing to children between 13 and 17.

Congress understands that social media companies’ marketing practices targeting children can cause harm, and that this harm is tied to company profits. However, federal officials also receive substantial campaign contributions while allowing these marketing practices to continue. In 2024, Big Tech spent over $60 million on lobbying and contributed hundreds of millions to Trump’s presidential campaign and library.

At the federal level, it is very unlikely that changes to social media algorithms will occur. Our federal officials are unlikely to restrict their share of profits by regulating social media in ways that could harm their financial interests. Since the federal government will not step up to protect our children, citizens must turn to state attorneys general and private practice lawyers to find innovative ways to use the common law to address societal harm. Common law has been creatively applied since the year 1250 and serves as the foundation of Western law. There is an urgent need to leverage it today to tackle problems that the federal government is unable or unwilling to solve.

William L. Kovacs is the author of Reform the Kakistocracy, the winner of the 2021 Independent Press Award for Political/Social Change. Mr. Kovacs has served as senior vice-president for the US Chamber of Commerce, chief counsel to a congressional committee, and a partner in DC law firms. He can be contacted at wlk@reformthekakistocracy.com