‘It is very much similar to pinning your confidential files on a public noticeboard and hoping no one takes a copy,’ a data protection expert said.

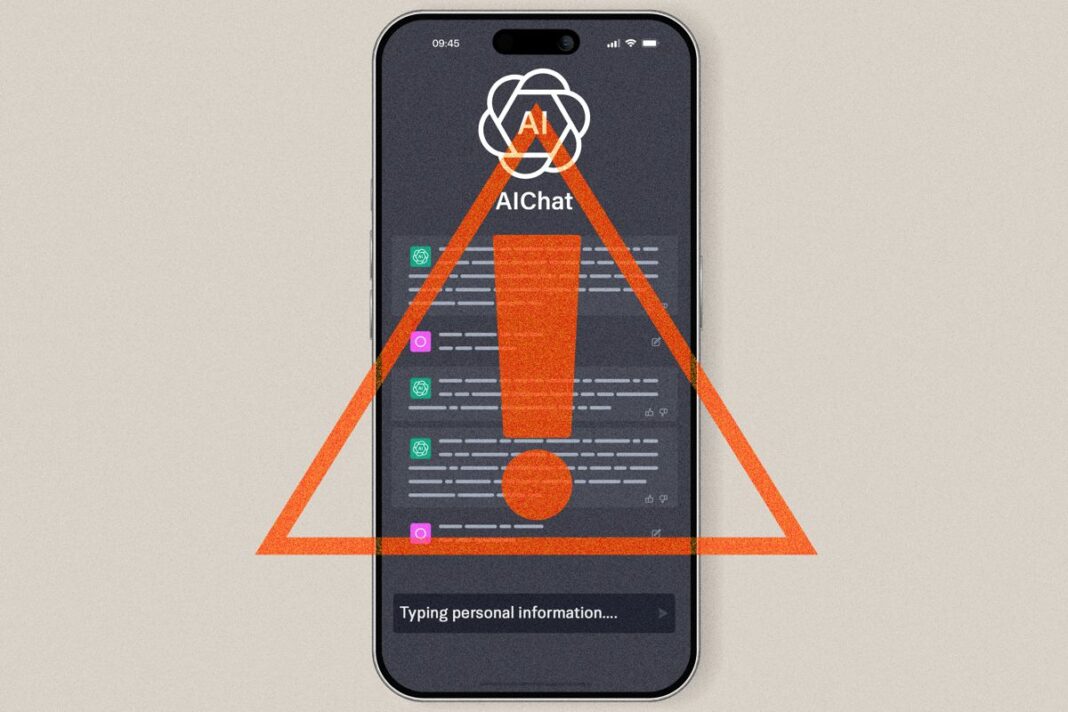

The world’s most powerful artificial intelligence (AI) models aren’t just changing how businesses crunch numbers or predict trends; they’re also heightening concerns over data security.

As AI capabilities accelerate, cybersecurity and privacy experts warn that the same models capable of detecting fraud, securing networks, or anonymizing records can also infer identities, reassemble stripped-out details, and expose sensitive information, according to a McKinsey & Company report released in May.

Ninety percent of companies lack the modernized security needed to defend against AI-driven threats, according to Accenture’s 2025 cybersecurity resilience report. The report drew on insights from 2,286 security and technology executives across 24 industries in 17 countries about their readiness for AI-driven cyberattacks.

So far this year, the Identity Theft Resource Center has confirmed 1,732 data breaches. The center also noted a “continued rise of AI-powered phishing attacks,” which are becoming more sophisticated and harder to detect. Among the industries hit hardest by these breaches are financial services, health care, and professional services.

“It is the predictable consequence of a technology that has been deployed with breathtaking speed, often outpacing organizational discipline, regulatory provisions, and user vigilance. The fundamental design of most generative AI systems makes this outcome almost inevitable,” Danie Strachan, senior privacy counsel at VeraSafe, told The Epoch Times.

AI systems are designed to absorb immense volumes of information for training, and that design creates vulnerabilities.

“When employees, often with their best intentions, provide AI systems with sensitive business data—be it strategy documents, confidential client information, or internal financial records—that information is absorbed into a system over which the company has little to no control,” Strachan said.

This is especially true if consumer versions of these AI tools are used and protective measures are not enabled.

“It is very much similar to pinning your confidential files on a public noticeboard and hoping no one takes a copy,” Strachan said.

Multifaceted Threat

Personal data can end up in the wrong hands, or be exposed by AI tools, in several ways. Hacker-directed attacks and data theft are well known, but exposure can also occur through what researchers call “privacy leakage.”

Publicly accessible large language models (LLMs) are a common route by which information ends up in a data breach or “leak.”